The Developer Experience for Building with AI

Choosing the right tools for the job, or at least knowing what to look for...

Have you had an app idea for using AI recently? Maybe you were thinking about a little program where you could take a PDF and chat with it.

Odds are, someone has thought about your idea. Maybe they are building it right now. Maybe they raised $10 million in VC money and are on track to be a major disruptor.

I say this not to discourage you, but to push you to pursue your idea and build an app that is powered by AI. For starters, most startups fail; that is a known fact. I want to draw your attention away from making money and toward problem solving (you know, that thing that programming was designed to help with in the first place).

It is well documented that state-of-the-art AI does better in niche environments than in generalized ones. We aren’t quite at Skynet, the Borg, or any other scary depiction of our inevitable future. So, what is your niche? What have you seen that other people probably haven’t seen? Or, if other people have seen it, what do you have that is different? If I were to guess, the fact that you are reading this means that you 1) are a developer and 2) are interested in AI. Both of these qualifying factors already put you in a very small percentage of humans, so what else do you do?

When you understand what it is that makes you different, your app idea should start to make sense. In the startup world, this is called “founder market fit”. I, breadchris, might be able to write a better PDF chat bot than you today. But if you worked in the medical profession and knew exactly which PDFs doctors would want to chat with, the requirements for handling those documents, and who to talk to in order to sell your chat bot idea, you would have a head start of months on me.

All of this to say: if you are a programmer and you have an AI app idea, freaking build it.

Now that you really want to build an app, you will ask: “well, how do I build it?”

Excellent question. Everyone else is still trying to figure that out too.

I’m going to need some context

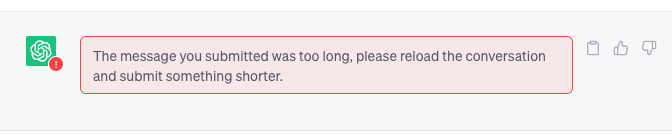

Consider our PDF chat bot. You might have tried a small version of this where you copied the contents of the PDF into ChatGPT and then asked questions. If the PDF was too long, ChatGPT would have rejected the message for exceeding its length limit.

Since LLMs have a maximum token context size, we run into our first problem: how do you ask questions about a bunch of content?

There are two approaches: we could fine-tune an LLM (slow and expensive, but accurate), or we could get creative about how content is loaded into the context window (fast and relatively cheap, but it could miss information).

I have not spent much time on the former for two reasons. First, I am not an ML expert and the space is moving very quickly; what I do today may be obsolete tomorrow. Second, I believe that data collection and indexing will produce data sets that are invaluable for training models, and that is something I think I can do pretty well. I will pass over my research into this space in this post, but I will cover it in a future article.

For loading content into the context window, what you will be doing is building an agent.
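To make "loading content into the context window" concrete, here is a minimal sketch of the idea: split the document into chunks, then pack the chunks that look most relevant to the question into a fixed token budget. Everything in it is an assumption for illustration. `count_tokens` just counts whitespace-separated words (a real tokenizer counts differently), the relevance score is plain word overlap, and the function names are made up.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into consecutive chunks of at most max_tokens words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]


def build_prompt(question: str, chunks: list[str], budget: int) -> str:
    """Pack the chunks sharing the most words with the question into the budget."""
    question_words = set(question.lower().split())

    def overlap(chunk: str) -> int:
        # Naive relevance score: number of distinct words shared with the question.
        return len(question_words & set(chunk.lower().split()))

    selected = []
    used = count_tokens(question)
    for chunk in sorted(chunks, key=overlap, reverse=True):
        cost = count_tokens(chunk)
        if used + cost > budget:
            continue  # this chunk does not fit; try a smaller one
        selected.append(chunk)
        used += cost

    context = "\n---\n".join(selected)
    return f"Context:\n{context}\n\nQuestion: {question}"
```

The trade-off mentioned above shows up right in the loop: whatever does not fit in the budget is never seen by the model.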

Building Agents

You can think of an agent as something that goes and does things for the ML model on its behalf. There are some ideas floating around regarding how to build agents. Microsoft has proposed theirs with the Semantic Kernel. It seems that most people have flocked to either LangChain or Llama Index as their agent framework of choice.
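Stripped of any framework, the core of an agent can be sketched as a loop: the model either asks for a tool to be run or gives a final answer, and the agent runs the tool and feeds the result back. The sketch below is not how Semantic Kernel, LangChain, or Llama Index implement it. The `CALL`/`ANSWER` text protocol, the `TOOLS` table, and `scripted_model` (a stand-in for a real LLM call) are all hypothetical.

```python
from typing import Callable

# Hypothetical tools the agent can run on the model's behalf.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "shout": lambda text: text.upper(),
}


def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM call: requests one tool, then answers with its result."""
    if not any(line.startswith("RESULT ") for line in transcript):
        return "CALL word_count: the quick brown fox"
    last_result = transcript[-1][len("RESULT "):]
    return f"ANSWER: the text has {last_result} words"


def run_agent(question: str, model: Callable[[list[str]], str], max_steps: int = 5) -> str:
    """Loop until the model answers, running any tool it asks for along the way."""
    transcript = [f"QUESTION {question}"]
    for _step in range(max_steps):
        reply = model(transcript)
        if reply.startswith("ANSWER:"):
            return reply[len("ANSWER:"):].strip()
        if reply.startswith("CALL "):
            name, _sep, argument = reply[len("CALL "):].partition(":")
            tool = TOOLS.get(name.strip())
            if tool is None:
                result = f"unknown tool {name.strip()}"
            else:
                result = tool(argument.strip())
        else:
            result = "could not parse reply"
        # Feed the outcome back so the model can use it on the next step.
        transcript.append(f"RESULT {result}")
    return "gave up"
```

Calling `run_agent("how many words?", scripted_model)` walks through one tool call and then returns the answer. Swapping `scripted_model` for a function that calls a real model is where the frameworks above come in.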

LangChain burst onto the scene as the go-to framework for integrating AI into an application. Having used it a bit, I can say there are certainly great ideas in this framework. The most valuable part of the project, to me, is the documentation, which I highly recommend reading through. Unfortunately, I find the code to be confusing. Outside of maybe whipping out a quick POC of an idea, I would not recommend using this library when building larger applications. To me, it seems that LangChain’s primary focus has been to integrate as many tools and ideas as possible, many of which solve the same problem.

Llama Index, I personally find, is a project whose code is much more conducive to building applications on top of. While it is seemingly similar to LangChain, its approach to the problem of loading content into the context window is a lot more succinct, and by extension more powerful. With well-defined concepts such as composable indexes, Llama Index has a distinct advantage over LangChain, in my opinion: the abstractions that exist are more meaningful and easier to extend.

Another one that does not get anywhere near enough attention is Haystack. What has especially caught my eye are the extensive tutorials they offer. Haystack is also not just focused on LLMs. They have a deep catalog of capabilities for training different types of models, which can help you move away from depending on LLMs for all of your app’s needs.

If you have had your ear to the ground, you have probably heard about vector databases for solving the context problem. My company and I have written a post about the various options that are available. Vector databases are helpful, but be careful about the hype here. Traditional ranked search algorithms, such as BM25, are still relevant in the AI age.
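As a reminder of how little machinery ranked search needs, here is a small sketch of BM25 scoring in plain Python. It assumes whitespace tokenization, the common defaults `k1 = 1.5` and `b = 0.75`, and the non-negative IDF variant that adds 1 inside the logarithm. A production search library will differ in those details.

```python
import math
from collections import Counter


def bm25_scores(query: str, documents: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score each document against the query with BM25 (higher is more relevant)."""
    if not documents:
        return []
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(doc) for doc in tokenized) / n_docs or 1.0

    # Document frequency: in how many documents does each term appear?
    doc_freq: Counter = Counter()
    for doc in tokenized:
        doc_freq.update(set(doc))

    scores = []
    for doc in tokenized:
        term_freq = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            # Rare terms get a higher weight than common ones.
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            # Term frequency saturates with k1 and is normalized by document length via b.
            length_norm = k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf * (k1 + 1) / (tf + length_norm)
        scores.append(score)
    return scores
```

The top-scoring documents (or chunks) are then the ones you would load into the context window, with no embeddings or vector database involved.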

If you care about your agent producing structured data (such as JSON) or need it to pick from one of a few options (and not make up its own), there are several tools to choose from. I originally stumbled upon LMQL, which is an entire language for formally expressing what you want ChatGPT to get back to you with. OpenAI has added function calling to their API, which means you can build your own plugin system on top of ChatGPT. Microsoft has Guidance for more robust control over the output.
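If you are not ready to adopt one of those tools, the simplest fallback is to validate the reply yourself and ask again when it is wrong. The sketch below shows that approach only; it does not use LMQL, OpenAI function calling, or Guidance. The label set, the prompt wording, and the `model` callable (any function that takes a prompt string and returns the reply text) are hypothetical.

```python
import json
from typing import Callable

ALLOWED_LABELS = {"billing", "medical", "other"}  # hypothetical categories


def parse_label(raw_reply: str) -> str:
    """Return the label from a JSON reply, or raise ValueError if it is not allowed."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError as error:
        raise ValueError(f"reply is not valid JSON: {error}") from error
    label = data.get("label") if isinstance(data, dict) else None
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"label {label!r} is not one of {sorted(ALLOWED_LABELS)}")
    return label


def classify(document: str, model: Callable[[str], str], retries: int = 2) -> str:
    """Ask the model for a label, re-prompting with the error when the reply is invalid."""
    prompt = (
        'Reply with JSON of the form {"label": "<label>"} where <label> is one of '
        f"{sorted(ALLOWED_LABELS)}.\n\nDocument:\n{document}"
    )
    for _attempt in range(retries + 1):
        try:
            return parse_label(model(prompt))
        except ValueError as error:
            # Tell the model what went wrong so the next attempt can correct it.
            prompt += f"\n\nYour last reply was rejected ({error}). Try again."
    return "other"  # fall back to a safe default after repeated failures
```

The important design choice is that your code, not the model, owns the list of valid options. The tools mentioned above move that same constraint closer to generation time.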

If there is a single piece of advice I can give you that will have the most impact, it is to spend time learning how to prompt engineer. Services, frameworks, and models will come and go, but understanding how to control the firehose of latent space is not going anywhere. You can get closer to what you want out of an LLM when you understand how it works and what you need to tell it.

More to come

There is much more to explore here with agents, especially on the technical side. I will leave this here for now, and I look forward to hearing what interested you about this piece and whether there is anything you would like me to elaborate on!

Fascinating stuff!